Scaling Microservices

Traditional monoliths based on service oriented architectures (SOA) can scale, but more often than not doing so is more complex than if the same system had been implemented with a microservice architecture. In this post, I will discuss the four ways to scale applications and how microservice architectures can be employed to help simplify application architectures in a DevOps environment. I’ve previously discussed microservices and you can read that here.

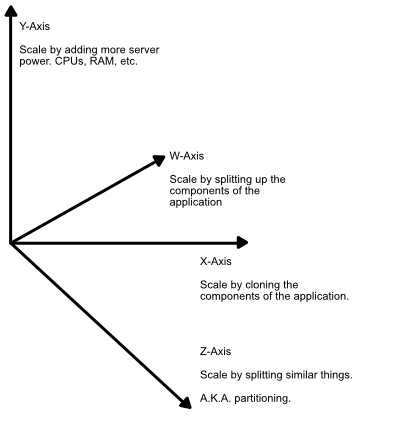

The Four Axes of Evil

As expected, there are multiple ways of scaling an architecture. In our case, four ways have been identified which lend themselves nicely to application scalability depending on the situation. Each varies in complexity and scalability, so it pays to have at least some idea of how the application will grow over time.

Note: I’ve modified the Scale Cube model as described in the book The Art of Scalability (Abbott & Fisher, Addison-Wesley Professional, 2015).

W Axis - Application Splitting AKA Microservice Architecture

Otherwise known as a microservice architecture, this strategy carries reasonably high architectural complexity, as it requires a good understanding of the domain and careful thought around the contextual boundaries of the application. Moreover, development complexity can be high as developers must coordinate multiple mini applications - each with its own specific functionality - to form the overall application. To hide the underlying structure of the application from the outside world, requests are typically sent to a reverse proxy (for example, NGINX) which routes each request to the relevant microservice.
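To make the routing idea concrete, here is a minimal sketch of what a reverse proxy decides for each request: which internal service should receive it. This is not how NGINX is configured or implemented. The route table, the service addresses and the `resolve_upstream` function are all hypothetical names I made up for illustration.

```python
from typing import Dict, Optional

# Hypothetical route table: public path prefix -> internal address of a microservice.
ROUTES: Dict[str, str] = {
    "/orders": "http://orders.internal:8001",
    "/users": "http://users.internal:8002",
    "/catalog": "http://catalog.internal:8003",
}


def resolve_upstream(path: str, routes: Dict[str, str] = ROUTES) -> Optional[str]:
    """Return the internal URL for a request path, or None if no route matches.

    A prefix matches only on a whole path segment, so "/orders" matches
    "/orders" and "/orders/42" but not "/ordersX". The longest matching
    prefix wins, so a more specific route can override a general one.
    """
    matches = [
        prefix
        for prefix in routes
        if path == prefix or path.startswith(prefix + "/")
    ]
    if not matches:
        return None
    best = max(matches, key=len)
    return routes[best] + path
```

The client only ever sees the public path. Which service sits behind it, and where that service runs, can change without the outside world noticing.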

There are several advantages to this architecture, particularly when the services are deployed inside containers such as Docker. Because each service is inherently smaller, more maintainable and more decoupled, multiple teams can work in parallel and with autonomy on their own services. It better supports the continuous delivery and deployment of large, complex applications because each service is independently deployable and more easily testable. From a scalability perspective, data is typically local to each microservice, so individual services can be horizontally scaled based on their own load.

The challenge with this architecture is that it introduces complexity. This makes initial development slower, and design decisions made early on can have a lasting impact. What’s clear is that for small applications this architecture is too much, so the difficult question becomes when to actually introduce it in the project lifecycle. The biggest challenge is knowing which services to implement and where the boundaries of each service lie. Aggregate boundaries tend to change as a better understanding of the project domain is gained, and refactoring services at that point can be difficult. The bottom line here is that adopting this strategy should not be a decision taken lightly.

X Axis - Horizontal Scaling AKA Application Cloning

This sees multiple instances of the application running on separate servers, all sitting behind a load balancer. In terms of complexity, this is the second easiest to implement after vertical scaling. The challenge is managing application state across the cluster; however, architecturally this is made relatively straightforward by in-memory caches such as Redis and Memcached. That is, a single cache is shared between the nodes in the cluster, so the same state is available regardless of which node the client is accessing.
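The shared cache idea can be sketched in a few lines. In this sketch, `SharedCache` is an in-process stand-in that I wrote for illustration, not a client for Redis or Memcached, and `AppNode` is a hypothetical application instance. The point is only that the state lives outside the nodes.

```python
class SharedCache:
    """In-process stand-in for an external cache shared by every node."""

    def __init__(self):
        self._data = {}

    def get(self, key):
        return self._data.get(key)

    def set(self, key, value):
        self._data[key] = value


class AppNode:
    """One clone of the application. It keeps no session state of its own."""

    def __init__(self, name, cache):
        self.name = name
        self.cache = cache

    def handle_request(self, session_id):
        key = f"visits:{session_id}"
        # Read-modify-write is fine for a single-threaded sketch. Against a
        # real shared cache an atomic operation (for example Redis INCR)
        # would be the safer choice.
        visits = (self.cache.get(key) or 0) + 1
        self.cache.set(key, visits)
        return f"{self.name} served visit {visits}"
```

If two nodes are created with the same `SharedCache`, a session that hits the first node and then the second sees its visit count continue from 1 to 2, because neither node holds the counter itself.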

The load balancer can be either hardware or software based. Either way, it distributes traffic to the nodes according to a strategy, for example by spreading the number of incoming requests evenly or by taking the current load on each server into account.
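As a rough illustration of those two kinds of strategy, the sketch below implements round robin (spread requests evenly) and least connections (prefer the node with the fewest requests in flight, a simple proxy for current load). The class and method names are my own, and real load balancers offer many more options than this.

```python
import itertools


class RoundRobinBalancer:
    """Hands out nodes in a fixed rotation, one per incoming request."""

    def __init__(self, nodes):
        self._cycle = itertools.cycle(list(nodes))

    def pick(self):
        return next(self._cycle)


class LeastConnectionsBalancer:
    """Picks the node that currently has the fewest active requests."""

    def __init__(self, nodes):
        self.active = {node: 0 for node in nodes}

    def acquire(self):
        # On a tie, the node listed first is chosen.
        node = min(self.active, key=self.active.get)
        self.active[node] += 1
        return node

    def release(self, node):
        # Call this when the request has finished.
        self.active[node] -= 1
```

Round robin works well when requests cost roughly the same. Least connections copes better when some requests are much slower than others, at the price of tracking state per node.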

Y Axis - Vertical Scaling AKA More Powerful Servers

With this strategy, the whole application runs in the same process, which reduces application complexity, increases development speed and facilitates simpler state management. Without a doubt this is the easiest strategy of all. Simply run beefier servers - more RAM, faster or more CPUs, faster hard disks, etc.

There are three main drawbacks with this strategy. Firstly, the application relies on a single point of failure (the server) and if it goes down, the only way to recover is to restart the server or replace parts. Secondly, individual servers can only scale up to a point before they run out of extensibility. That is, you will get to the point where there is no hardware that can accommodate you. Third and finally, big servers are specialised and expensive - often running into the tens or hundreds of thousands of euro - which can make them uneconomical and impractical. It may be advisable to reserve this strategy for simple applications, legacy systems and database servers. Where possible, the question must be asked whether it makes sense to re-engineer the application to support horizontal scaling - an often risky and difficult journey.

Z Axis - Partitioning

Partitioning is typically used to scale databases. In this strategy, data is split between servers or tables so that each server deals with only one subset of the entire set of data. With smaller sets of data, cache utilization is improved, memory usage is reduced and disk I/O is reduced. Fault tolerance is improved as failures only make part of the data inaccessible rather than the whole. As an alternative to vertical scaling, this may be attractive when the data sets are large.

Obviously, the big drawback is the higher level of application complexity, as the partitions may need to be managed at application level; however, many databases, including MySQL, can manage table partitioning natively. The challenge then becomes choosing the correct key to partition against. If the partitioning scheme needs to change later, for example because the data turns out to be unevenly distributed, it can be tricky to do so.
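When partitions are managed at application level, the core of it is a function that maps a key to a partition. The sketch below uses simple hash-modulo partitioning, which is one common choice among several and not something a particular database prescribes. The function names and the idea of partitioning by a customer ID are assumptions for illustration. The second function shows why changing the scheme later is painful: it lists the keys that would have to move.

```python
import hashlib


def partition_for(key: str, partition_count: int) -> int:
    """Map a key to a partition number in the range 0..partition_count-1.

    A stable hash is used instead of Python's built-in hash(), which is
    randomised per process for strings and so would send the same key to
    different partitions after a restart.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partition_count


def keys_that_move(keys, old_count: int, new_count: int):
    """Return the keys whose partition changes when the partition count changes."""
    return [
        key
        for key in keys
        if partition_for(key, old_count) != partition_for(key, new_count)
    ]
```

With plain modulo hashing, going from four partitions to five typically relocates most keys, which is why the partition key and scheme deserve careful thought up front.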

Conclusion

In most cases, applications will use a combination of these four strategies to scale up to handle more traffic. Whichever way you choose, be aware that there is no silver bullet and the pros & cons need to be weighed up against each other in the context of the application requirements.
